Introduction to Machine Learning and Data Mining

Kyle I S Harrington / kyle@eecs.tufts.edu

Some slides adapted from Roni Khardon

Image from Alex Poon

Features

The input to a ML algorithm/model is composed features (aka attributes)

The output is a class or a value

The Black Box Delusion

Can we just take tons of measurements, feed them into our ML algorithm, and start making predictions?

Features

- More features generally slow ML algorithms down

- Irrelevant features can inhibit performance

What can we do about this?

Feature Selection

- Eliminate features

- Choose subsets of features that work well

- Build it into the ML algorithm

Instance Transformation

Our dataset $D$ has $N$ dimensions, for $N$ features

Instance transformations reduce the number of dimensions by transforming the features themselves

Instance Transformation

If we had a dataset with red, green, blue, and yellow features (N=4),

Then we might transform the dataset to $( red - green ) / (red + green)$ and $ (blue-yellow) / (blue + yellow)$

Principle Component Analysis

Principle component analysis (PCA) maps from one set of axes to orthogonal axes

Roughly speaking, project onto the axes of highest variation

Principle Component Analysis

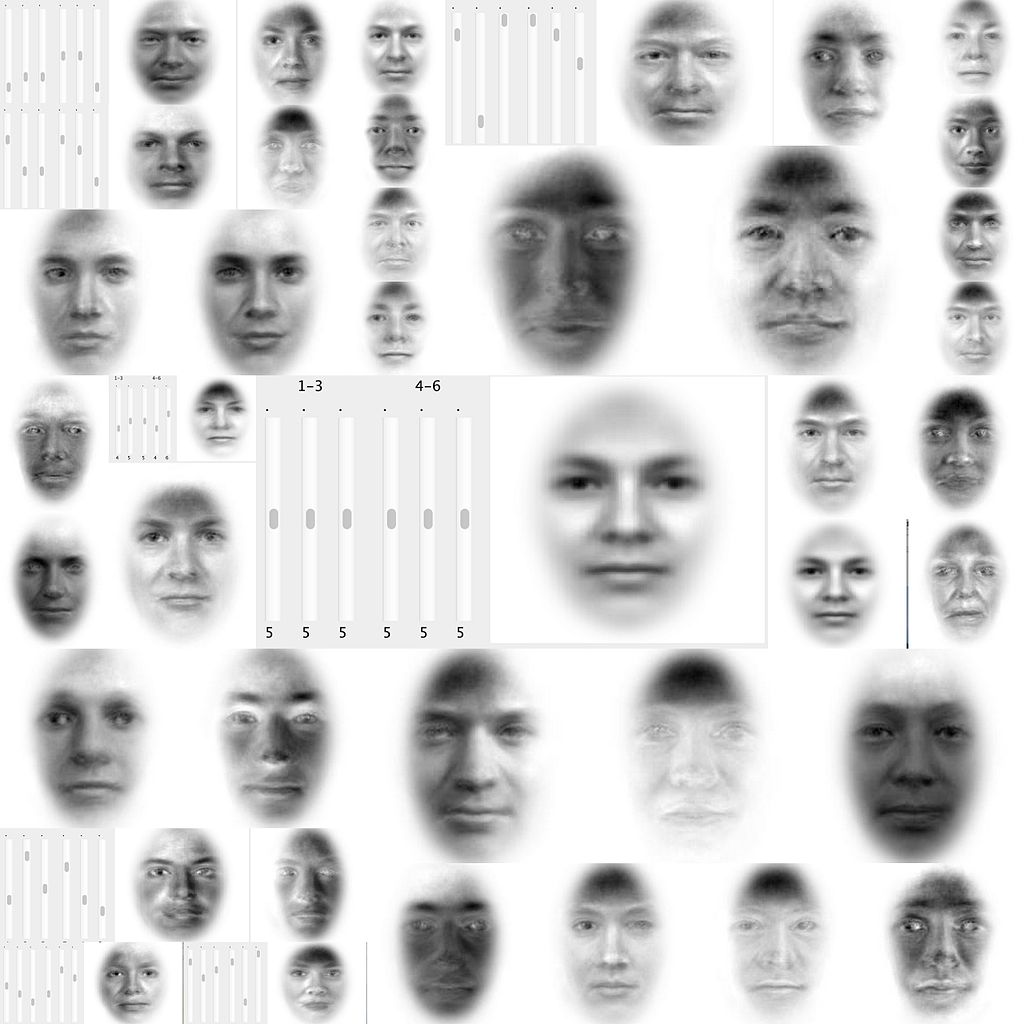

Eigenface reduces faces to low-dimensional space

| Bases | Generated faces |

|

|

Manifold Methods

Datasets can be thought of as manifolds

(Saul and Roweis, 2003)

(Saul and Roweis, 2003)Embed data in a low dimensional space, while preserving distance between points

Instance Transformation

Instance transformations reduce the number of dimensions by transforming the features themselves

Most methods of instance transformation are unsupervised

Filter Methods

Filter irrelevant features based upon the dataset

Filter Methods

- Assign a score to each feature with a heuristic

- Filter out useless features

Filter Methods

Ranking features based upon correlation between feature and class

$Rank(f) = \frac{ E[( X_f - \mu_{X_f} ) ( Y - \mu_Y ) ] }{ \sigma_{X_f} \sigma_Y }$

where $f$ is the feature of interest, and $Y$ is the class

Filter Methods

Mutual information between a feature and class

$Rank(f) = \displaystyle \sum_{X_f} \displaystyle \sum_Y p(X_f,Y) log \frac{ p(X_f,Y) }{ p(X_f) p(Y) }$

where $f$ is the feature of interest, and $Y$ is the class

Filter Methods

- Assign a score to each feature with a heuristic

- Filter out useless features

What issues could there be?

How necessary is this?

Filter Methods

Issues:

- How many features do we keep?

- Heuristics are only applied to 1 feature

Wrapper Method

- Select a subset of features

- Run a ML algorithm

- Use performance of ML algorithm to choose best subset

What is appealing about this?

Wrapper Method

Advantages of using validation sets

Features are tailored to ML algorithm

Considers different ways of combining features

Wrapper Method: Forward Selection

Start with subsets of only 1 feature

Grow subset of features by adding 1 new feature per iteration

Wrapper Method: Backward Elimination

Start with the full set of features

Eliminate 1 feature per iteration

Wrapper Method: Alternatives

Exhaustive search (consider all subsets)

Alternative AI methods (simulated annealing, genetic algorithms, ...)

Feature subset search is NP-hard

Comparing Filter and Wrapper Methods

Filtering: 1-step process, considers features independently

Wrapper: iterates through subsets of features, selects subset that matches ML algorithm

Feature Selection within ML Algorithm

When searching/optimizing, provide an incentive to be simple (sparse) by penalizing complexity

Will be covered later (L1 regularization)

Feature Preprocessing

The range of values for a given feature can impact an algorithm's performance

Remember using the value of the year directly on assignment 1?

Linear scaling

Scale the values into the range $[0,1]$

$x \leftarrow \frac{ x - x_{min} }{ x_{max} - x_{min} }

Scale based on training set only

Z-normalization

Scale the distribution to have mean=0 and std=1

$x \leftarrow \frac{ x - \mu_X }{ \sigma_X }

Scale based on training set only

Feature Discretization

Some algorithms only work on discrete features

We may need to discretize real-valued features

Feature Discretization

Calculate the histogram

This divides the values into bins

- Equal bin sizes

- Equal # of instances per bin

Feature Discretization

Alternatively, use a heuristic/ad-hoc method to discretize in a useful way

E.G. Build a decision tree, let the DT algorithm discretize, and use the split values of the optimized tree

From Discrete to Numerical

Some features are unordered (i.e. Browsers = [ Firefox, Chrome, Safari ])

Most common approach is to use unit vectors:

| Firefox | Chrome | Safari |

| 1 | 0 | 0 |

| 0 | 1 | 0 |

| 0 | 0 | 1 |

Quiz 1

Similar assignment 3

Covers: kNN, Decision trees, Naive Bayes, Measuring ML algorithms

What Next?

Quiz 1

Hands-on with Features